Don’t Mention The War! A Great Utility Computing Deception

Let’s talk about something usually considered both certain and ubiquitous¹. No, you fellow cynics, the answer here is not “war”, although we will predict a market war by the end of this article. Our topic today is utility computing, as exemplified by Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform (GCP). Given the title of this article, you might wonder how such a commonly understood and relatively easy-to-use service could be a deception. The great deception really has to do with its relative market maturity. “Whoa! Slow down!” you might be thinking. “It’s a utility – what does market maturity have to do with it?”

Let me step back and start with what a utility implies in general. Most of us think first about our electric service. For example, I have an electric meter on my house that measures how much electricity I actually consume, in kilowatt-hours (kWh), and that reading determines what I pay my electricity provider. My electric service also has a configured capacity constraint: a specifically sized feeder line (with fuses or breakers to match) limits the total current (in amps) that I can draw at any one time².
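The two halves of that electric service, a meter that bills accumulated energy and a breaker that caps instantaneous current, can be sketched in a few lines. This is a minimal illustration, not how any utility actually computes a bill: the 120 V nominal voltage, 200 A breaker, $0.15/kWh tariff and the hourly readings are all assumed values, and the load is treated as purely resistive (power = volts × amps).

```python
# Hypothetical illustration of metered electric service:
# you are billed for energy (kWh) and separately limited by capacity (amps).

NOMINAL_VOLTS = 120.0   # assumed nominal U.S. household voltage (RMS)
BREAKER_AMPS = 200.0    # assumed main breaker rating
PRICE_PER_KWH = 0.15    # hypothetical tariff, dollars per kWh


def power_kw(amps: float, volts: float = NOMINAL_VOLTS) -> float:
    """Instantaneous power in kilowatts for a resistive load (P = I * V)."""
    return amps * volts / 1000.0


def energy_kwh(samples_amps: list[float], hours_per_sample: float) -> float:
    """Energy consumed: power accumulated over time, approximated from
    fixed-interval current readings."""
    return sum(power_kw(a) * hours_per_sample for a in samples_amps)


def within_capacity(samples_amps: list[float], limit: float = BREAKER_AMPS) -> bool:
    """The capacity constraint: the draw must never exceed the breaker rating."""
    return max(samples_amps) <= limit


# Hypothetical hourly current readings over four hours
readings = [10.0, 25.0, 0.0, 40.0]
kwh = energy_kwh(readings, hours_per_sample=1.0)
print(f"{kwh:.1f} kWh, bill ${kwh * PRICE_PER_KWH:.2f}, "
      f"within capacity: {within_capacity(readings)}")
```

Note that the hour with zero draw adds nothing to the bill: you pay only for what the meter records, while the breaker rating bounds what you *could* draw.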

In the ideal of utility computing, we might imagine that it’s pay-as-you-go, that is, that one pays for compute as a utility. That is how cloud services today are often marketed and represented. We might imagine that we pay for each unit of compute we actually use, and that this usage is metered by something strictly defined and measured in a repeatable manner. We might think that as we use more, we pay more, but also that we pay only for what we use. Unfortunately, that’s not quite the current state of cloud computing. We aren’t paying cloud providers for strict consumption of a utility, such as the number of CPU-seconds we actually consume. Cloud subscriptions are not billed in terms like our electric meter’s kWh. Nor is our cloud computing delivered at an agreed-upon, tightly managed service level, like our electric company’s commitment to providing a specific voltage.

You might be thinking, “but we rent machine instances in our cloud by the hour, so maybe instance-hours are our utility metric?” That leads to my point. Renting a virtualized unit of potential computing is more analogous to renting a hotel room than to paying for a commoditized utility. At a hotel you’ll be charged the same amount regardless of how many hours you actually occupy the room. You are charged if you don’t use the room at all (and didn’t cancel in time). You can be charged even when you don’t show up and the hotel gives the room to someone else who also pays for it. You are assigned a specific, actual room only when you check in (and that takes some “provisioning” delay). The room you’re given often doesn’t match what you reserved. You can end up in a room that smells of smoke, is covered in cat hair, faces the parking lot and has a plugged toilet. Your hotel “service” routinely overbooks and can give you a poor substitute (ever been “walked” to another nearby hotel?) or cancel your reservation even if you’ve confirmed and prepaid.

Does this happen a lot in cloud computing? I’m convinced some part of it happens every time, for every machine instance rental, unless one manages, in some act of supreme DevOps, to run all machine instances at 100% of their defined utilization all the time³ (and assuming one can recover instantly from node failures in a cluster of 100%-utilized nodes).
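Why is running every node at 100% utilization unrealistic in the first place? A minimal sketch, assuming the simplest textbook queueing model (M/M/1: one server, Poisson arrivals, exponentially distributed service times), which is an illustrative stand-in and not a claim about how any particular cloud instance behaves. In that model the mean response time is R = S / (1 − U), where S is the mean service time and U the utilization; the 10 ms service time below is hypothetical.

```python
def mm1_response_time(service_time: float, utilization: float) -> float:
    """Mean response time of an M/M/1 queue: R = S / (1 - U).

    Only defined for 0 <= U < 1; at U >= 1 the queue grows without bound.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("M/M/1 is only stable for utilization in [0, 1)")
    return service_time / (1.0 - utilization)


# Hypothetical mean service time of 10 ms per request
SERVICE_MS = 10.0
for u in (0.5, 0.8, 0.9, 0.95, 0.99):
    r = mm1_response_time(SERVICE_MS, u)
    print(f"utilization {u:4.0%}: mean response {r:7.1f} ms")
```

The curve is flat at moderate load and explodes near saturation, which is the non-linear relationship between utilization and performance that capacity planners design around.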

As another example, consider airline travel. You can book a seat on an airplane, but it’s not really your seat. It’s a reservation that you pay for whether or not you use it. You don’t pay for how you actually use air travel, in units that might measure timely transportation over the fixed distance between airports, perhaps passenger-miles delivered. You certainly don’t have a measurable contract guaranteeing a level of service in terms of your total actual travel time, ease of boarding, smoothness of flight, behavior of other passengers, availability of snacks, etc., despite frequent-flyer miles, club membership expectations and class-upgrade marketing. You can always be reassigned seats and even preempted off the plane after boarding. Many routes are intentionally overbooked because the airline profits by planning on an expected number of no-shows.
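The airline’s bet on no-shows can be made concrete with a toy model. This is a minimal sketch under hypothetical assumptions, not any airline’s actual method: each ticket holder shows up independently with the same probability (a binomial model), fares are non-refundable, and each bumped passenger costs a fixed compensation amount. The seat count, show rate, fare and bump cost are all made up.

```python
from math import comb


def binom_pmf(n: int, k: int, p: float) -> float:
    """Probability that exactly k of n independent ticket holders show up."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def overbooking_outcome(seats: int, sold: int, show_prob: float,
                        fare: float, bump_cost: float) -> tuple[float, float]:
    """Return (probability someone is bumped, expected revenue).

    Assumes every sold ticket is paid for whether or not the holder shows,
    and each passenger who shows up beyond capacity costs bump_cost.
    """
    p_bump = 0.0
    expected_bumped = 0.0
    for shows in range(seats + 1, sold + 1):
        pk = binom_pmf(sold, shows, show_prob)
        p_bump += pk
        expected_bumped += (shows - seats) * pk
    return p_bump, sold * fare - bump_cost * expected_bumped


# Hypothetical flight: 100 seats, 90% of ticket holders show up,
# $200 fare, $600 cost (vouchers, rebooking) per bumped passenger.
for sold in (100, 105, 110):
    p_bump, revenue = overbooking_outcome(100, sold, 0.9, 200.0, 600.0)
    print(f"sold {sold}: P(bump) = {p_bump:.3f}, expected revenue = ${revenue:,.0f}")
```

Selling a few seats beyond capacity raises expected revenue because the no-shows have already paid, while the occasional bump is cheap in expectation; the same arithmetic applies to any reservation-based business.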

So why is cloud computing today not more like a true utility? Certainly cloud providers can (and probably do) measure your actual under-the-hood resource consumption. But, as you can appreciate, cloud provider margins can be much bigger than under usage billing because, like hotels and airlines, they can sell reservations for potential compute hours instead of actual resources used. They make a ton of revenue on effective compute “no-shows”, or more generally on the gross under-utilization of reservations⁴. In my consumer’s view of economics, that looks more like a contracted product lease for potential usage than a utility service billed by actual usage.
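The cost of those “no-shows” to the customer is simple arithmetic. A minimal sketch, assuming a hypothetical flat price per instance-hour and comparing it with an equally hypothetical metered bill that charges the same unit price only for the fraction of capacity actually consumed; the rate, fleet size, hours and utilization are illustrative, not any provider’s pricing.

```python
def effective_cost_per_used_hour(hourly_rate: float, utilization: float) -> float:
    """Price actually paid per hour of compute used, when billing is per
    reserved instance-hour but only a fraction of that capacity is used."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate / utilization


def monthly_bills(hourly_rate: float, instances: int, hours: float,
                  utilization: float) -> tuple[float, float]:
    """Return (reservation-style bill, hypothetical metered bill) at the same
    unit price; the metered bill charges only for capacity consumed."""
    reserved = hourly_rate * instances * hours
    metered = reserved * utilization
    return reserved, metered


# Hypothetical: $0.10 per instance-hour, 20 instances,
# 730 hours in a month, 15% average utilization.
reserved, metered = monthly_bills(0.10, 20, 730, 0.15)
print(f"reserved: ${reserved:,.2f}  metered: ${metered:,.2f}  "
      f"paid for unused capacity: ${reserved - metered:,.2f}")
print(f"effective rate per used hour: ${effective_cost_per_used_hour(0.10, 0.15):.3f}")
```

At low utilization the effective price per hour of work done is a multiple of the advertised rate, and that gap is the revenue a reservation model earns on idle capacity.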

As another example, this seems more like a car rental⁵ billed by days reserved than a household utility billed mainly by units consumed. Whether you never pick up the car, drive it cross-country, or just leave it parked at your airport convention hotel for several days, you pay the same. Many times my car rental agency has run out of cars before I reached the counter, despite my having prepaid for a specific car reservation. They also clearly overbook during busy times to benefit from no-shows.

Capitalism is fundamental, so why am I complaining? Well, as shown, the client-side economics of a product/rental service market differ from those of a true utility/commodity service market. You pay more when you reserve or rent than when you pay for usage. You don’t benefit from the commodity price competition (or regulation) of a utility market. And the service provided by a utility is tightly managed and guaranteed, standardized, comparable, fungible and usually governed. Cloud providers don’t actually want to be in the business of providing commodity computing – they like being able to overbook, to charge for and reallocate unused resources, to substitute service levels, and to preserve vendor lock-in based on unique functionality (“vendor gravity”, if you are kind).

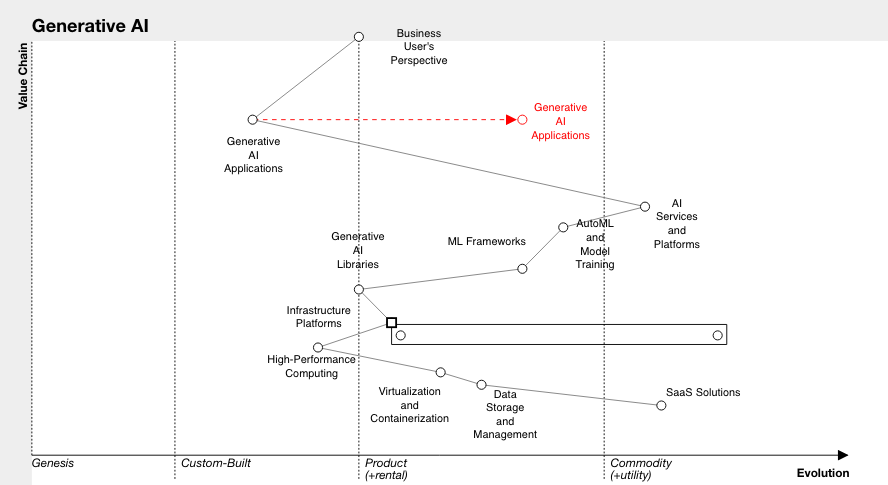

What will drive the market toward truer utility computing? Inevitably, market competition. I’ve been re-reading Simon Wardley’s entertaining backstory and derivation of his Wardley Maps techniques. I highly recommend his approach to strategic business planning and mapping, and the basic book is free. The full book might be a bit long for execs looking for quick or external advice (in which case, as Simon says, “I’m available for facilitation and consulting!”), but finally gaining a fundamental market perspective and developing better⁶ strategic business plans based on your business-specific “landscape mapping” is invaluable.

In Wardley Mapping, an early step is to rate each major component required to deliver customer value by what we might call market maturity (following an evolutionary lifecycle), on a scale ranging from Genesis/R&D through Custom/One-Off and Product/Rental Service, and ultimately to Commodity/Utility Service. Note that merely naming something “utility computing” doesn’t mean it has already evolved into a utility service.

If you are doing Wardley Mapping on your own business strategy, pay particular attention to how you rate today’s cloud computing services, which are marketed as utility computing but may not actually serve you as a Commodity/Utility. In Wardley Mapping terms, I might show this with a large “inertia” block standing in the way of its evolution (I’d propose the block is mainly due to a lack of supply-side competition). Your strategy map might or might not care about this positioning, but in general such inertia blocks eventually give way to inevitable evolutionary forces, and that is where and when market “war” (in Wardley Mapping parlance) happens, bringing sea changes for entrenched vendors and game-changing emerging opportunities. A big future war in your value chain is something you should always consider in your strategic planning!⁷

To summarize, I believe there is an inertia block preventing utility computing (as commonly known) from evolving into a truer utility service. That block is likely supported by deceptive marketing and monopolistic positioning. Inevitably that inertia block will crumble, and then there will be market war. If your customer value chain includes “compute services”, do you have a strategic plan for when that happens?

1. Certainty and ubiquity are terms used in Wardley Mapping when analyzing the rate of evolution of a value chain component.

2. Amps are a unit of current, a measure of how much electricity flows through a device while it operates, while kilowatts are a unit of power (watts = amps × volts, for a simple resistive load). If you know the set voltage (“pressure”), which for U.S. household outlets the electric company manages to a nominal 120 V RMS (often still called “110 V”; the sinusoidal peak is about 170 V), then kilowatts = (amps flowing × 120 V)/1000 at any instant. Moving to a 240 V circuit (e.g. for ovens, dryers, welding machines…) means you use twice the power at the same current draw. What matters, and what you pay for, is the total energy you use, that is, power accumulated over time, nominally counted in hours. Thus our basic unit of electric service is the kWh, or kilowatt-hour: one kilowatt of power consumed for one hour.

   BTW, the ability of your power company to hold voltage near a target with limited variation, despite dynamically changing loads on widely shared power lines, is one of the fundamental feats of power engineering magic 🙂

3. Next post, I might review some basic facts about queueing-based response time, as I spent decades of my professional career consulting at Fortune 1000 companies, helping them capacity-plan and manage big UNIX server “pets” back in the days of large scale-up datacenter compute. Ah, how quickly the market forgot how resource utilization relates non-linearly to performance, just because today’s DevOps manages herds of cattle!

4. For several years I ran a Fortune 500 “server consolidation” planning consulting group. On average we’d find that well over 60% of any client’s datacenter servers were barely utilized at any one time. In some cases, 95% of deployed servers were in use less than 10% of the time, and some of that use was drawing fractal-based screen savers. The answer often wasn’t to work hard at decommissioning unused servers and their obsolete apps, but to migrate them as-is to VMs so they could be “consolidated” onto fewer, larger servers. The great irony, of course, was that these pricey new VM hosts weren’t actually hosting much real work even in aggregate, while VMware and hardware suppliers like Dell made a pretty penny.

5. I haven’t fully considered the Uber business model, which presents a specific, although dynamically priced, charge per actual trip at the time you call for it. To be fair, AWS has a spot price market too. And electric companies may offer a form of spot pricing to their largest industrial users. Still, dynamic pricing doesn’t seem to change the fundamental unit of consumption in truly pay-as-you-go services; it just changes the amount paid per unit based on current supply and demand.

6. I think I can safely summarize Wardley’s position as: one can’t even begin actual strategic business planning without a map.

7. Again per Wardley, evolution goes through a cycle of wonder, peaceful competition and then war, with war happening at the end of a cycle when big disruptive changes in a market are inevitable. Significantly, it’s not possible to predict exactly “when” this occurs (evolution tends to exhibit a non-linear relationship between “certainty” and “ubiquity”, and its timing can’t be predicted), but it is possible to predict “what” is going to happen, in this case war, essentially because such evolution is inevitable.