Research, News, Interviews & Reports

What’s trending in IT technology?

SoftIron’s HyperCloud: Redefining Private Cloud Infrastructure

Unlike traditional approaches that combine complex IT components, SoftIron's HyperCloud stands out with its top-down methodology, ensuring seamless integration and simplified management. Original video (with full player and complete transcript)[…]

Read moreAdvancing Healthcare with AI: Ethical Considerations and Future Trends

In this webcast, you’ll learn how AI transforms healthcare delivery, patient outcomes, operational efficiency and its potential to revolutionize clinical decision-making, patient risk stratification, and population health management. Original video[…]

Read moreAlludo Parallels: Redefining Desktop and Cloud Integration for the Modern Enterprise

Alludo Parallels is a compelling alternative to established players like VMware and Citrix in the ever-evolving world of virtual machine management and remote desktop solutions. The discussion with Kamal Srinivasan[…]

Read moreA few things we’re publishing…

ANALYST-DRIVEN EXPERT INTERVIEWS

Want to know what

‘s happening with your favorite IT vendor, that latest startup or their newest solutions?

Browse hundreds of 1:1 interviews with key executives in IT, with more coming every week!

ENGAGING VIDCASTS/PODCASTS

Interested in the latest technology trends but don’t have time to wade through 500+ magazines?

Listen to archives of our podcast of the top 6 tech articles drawn from the IT Weekly Newsletter. No hype, no marketing – just the hand-picked ‘best-of-the-best’.

MARKET RESEARCH & SHOWCASE EVENTS

Looking for current, reputable research on trending IT topics or evolving IT Markets?

Whether an IT pro or vendor, our market research goes deep – teasing out valuable insights, with expert commentary and the occasional brash prediction. Check out our current research calendar for upcoming events!

INTELLIGENT EVENT/SHOW COVERAGE

Tired of tedious audio-over-slide webinar events?

Our expert analyst content connects and delivers on webinar events, product demos, executive interviews and customer testimonials.

Be sure to catch our live and streaming show coverage!

Market Research – Upcoming Topics

We host free kickoff and summary online events for every research track. Our research efforts include targeted vendor interviews, an “IT pro” survey and a thorough report on key findings and recommendations.

Working From Anywhere

Working from Home (WFH) for some became Working From Anywhere (WFA) for most. It’s now a hybrid world. How can IT best cope?

The Data Center Lives On

The IT Data Center still hosts and protects mission-critical apps and data. It’s also a key integration point between edges, clouds, services, and global users. What’s in store next for Data Centers?

Fighting Shadow IT

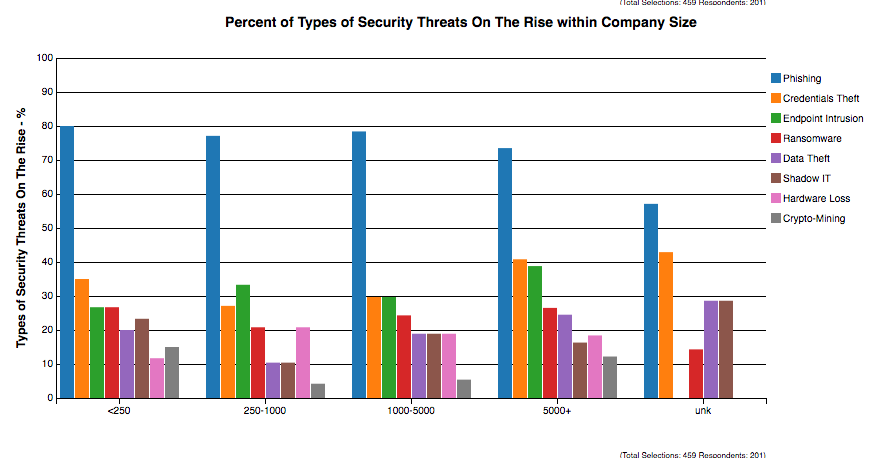

Shadow IT and increasing SaaS usage threatens GRC (governance, compliance, risk) and corporate integrity. It increases cost and risk. How can IT best identify and address Shadow IT?

AI For The Enterprise

?

How can IT best support productive AI innovation? Critical data is everywhere across the organization, compliance and governance is risky, and GPUs are expensive. Build AI IT, buy AI IT, or rent AI IT in the cloud?

New Frontiers In Handling Hot Data

Great leaps in performance like emerging network and storage offload cards, memory class storage and computational storage are coming along. But also potentially huge changes in how computing is done. Is IT ready?

Controlling Clouds, Cloud Controls??

Cloud adoption accelerated last year, so did IT challenges with hybrid/multi cloud use, data protection and SaaS management. Repatriation is an option, and hybrid ops are improving fast. Is cloud still the winning bet?

Interested in Research? Request more detail about our research program:

Don’t Miss Any of Small World Big Data!

Follow us on LinkedInIf you are an IT vendor, check out our Vendor Services