Data Stream Mining with Cube

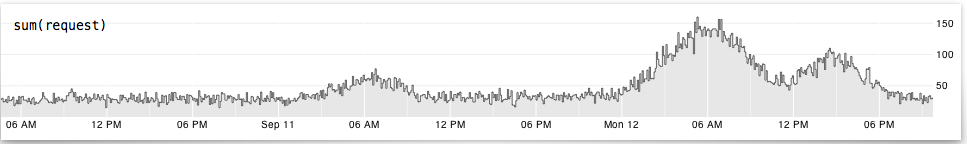

Time-series data analysis can be approached in two ways. Traditionally, time-series data is aggregated into partitioned historical databases and then reported on at scheduled intervals. Commonly, reports delivered today cover data collected yesterday. A modern (and perhaps most relevant …