Cloudy Object Storage Mints Developer Currency

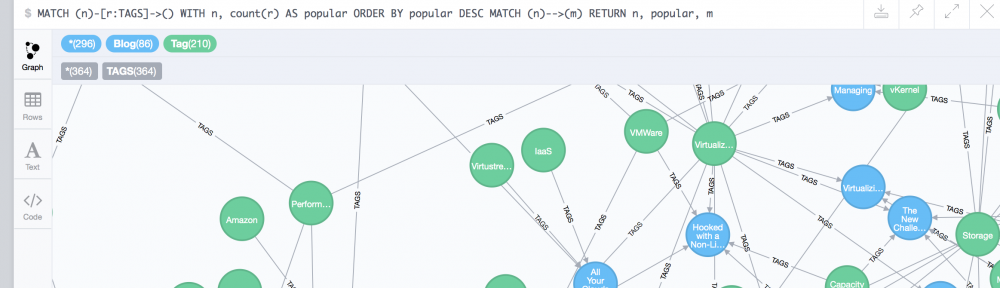

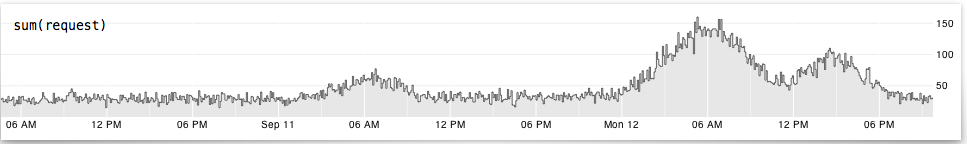

Having just attended VMworld 2017 and received pre-briefs for the upcoming Strata Data NY, I’ve noticed a few hot trends that aim to foster cross-cloud, multi-cloud, and hybrid operations. Among these are:

- A focus on centralized management for both